In the previous post, the procedures to compute the statistic for six common normality tests, and to obtain the respective p-values using asymptotic approximations, were briefly reviewed. In this post, it is shown that these approximations, which appear in classic statistical textbooks and papers, are not entirely accurate for arbitrary sample sizes or nominal significance levels.

For each test, 1,000,000 samples from a normal distribution were simulated using MATLAB, the p-values were computed with the analytical approximations, and their frequencies were plotted as histograms with bins of width 0.01. This was repeated for 34 different sample sizes, ranging from 10 to 10,000 (more specifically: 10, 15, 20, 25, 30, 40, 50, 60, 70, 80, 90, 100, 150, 200, 250, 300, 400, 500, 600, 700, 800, 900, 1000, 1500, 2000, 2500, 3000, 4000, 5000, 6000, 7000, 8000, 9000, 10000). Because the data are normal, the null hypothesis is true in every realisation, and valid p-values should then be uniformly distributed, with about 1% of them falling in each bin. The best approximations should therefore produce a flat series of bars between p = 0 and p = 1, and be stable across sample sizes, particularly at the most common significance levels: near or below p = 0.05.
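The checking procedure can be sketched in Python as follows. This is not the original MATLAB code; the function names `pvalue_histogram` and `z_test_pvalue` are hypothetical. As an assumption for illustration, the test plugged in is a one-sample z-test with known variance, whose p-values are exactly uniform under the null, so its histogram shows what a well-calibrated approximation looks like. Any function that maps a sample to a p-value could be passed in its place.

```python
import math
import random

def z_test_pvalue(sample):
    """Two-sided p-value for H0: mean = 0, assuming a known variance of 1.

    Under H0 this p-value is exactly Uniform(0, 1), so it serves as a
    reference for a perfectly calibrated test.
    """
    n = len(sample)
    z = sum(sample) / math.sqrt(n)           # mean / (1 / sqrt(n))
    return math.erfc(abs(z) / math.sqrt(2))  # 2 * (1 - Phi(|z|))

def pvalue_histogram(test, n, reps, nbins=100, seed=0):
    """Simulate `reps` normal samples of size `n`, apply `test` to each, and
    return the proportion of p-values in each of `nbins` bins on [0, 1]."""
    rng = random.Random(seed)
    counts = [0] * nbins
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        p = test(sample)
        # p == 1.0 would index past the last bin, so clamp it into that bin
        k = min(int(p * nbins), nbins - 1)
        counts[k] += 1
    return [c / reps for c in counts]

if __name__ == "__main__":
    props = pvalue_histogram(z_test_pvalue, n=20, reps=20000)
    # Empirical rejection rate at alpha = 0.05: the first five 0.01-wide bins
    print("rejection rate at 0.05:", sum(props[:5]))
    print("largest deviation from 0.01:", max(abs(q - 0.01) for q in props))
```

For a well-calibrated test, every bin should hold close to 0.01 of the p-values, up to simulation noise, and the sum of the first five bins should be close to 0.05.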

In general, any test can be analysed in terms of power, robustness to sample size, ability to correctly reject specific types of distributions, and other properties. The analyses presented here refer exclusively to how often the null is rejected when it is true, compared with the nominal significance level, when the p-values are obtained from analytical approximations. Poor performance in this analysis does not imply that the test, per se, is poor. It only implies that the approximation used to derive the p-values is not accurate. The tests may still be very powerful to detect non-normality, but accurate p-values must then rely on tables produced via Monte Carlo simulations, whose accuracy is limited only by the number of realisations and can therefore be made as high as needed.
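A Monte Carlo p-value can be sketched as below, assuming the statistic grows with the departure from normality. The names `monte_carlo_pvalue`, `abs_skewness` and `mc_standard_error` are hypothetical, and the absolute sample skewness is used only as an example statistic. Standard normal samples suffice here because the statistics discussed in this post do not depend on the location and scale of the data.

```python
import math
import random

def abs_skewness(x):
    """Example statistic: absolute sample skewness |sqrt(b1)|."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    return abs(m3) / m2 ** 1.5

def monte_carlo_pvalue(statistic, observed, n, reps=10000, seed=0):
    """Monte Carlo p-value: the proportion of simulated normal samples whose
    statistic is at least as extreme as the observed one."""
    rng = random.Random(seed)
    exceed = 0
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if statistic(sample) >= observed:
            exceed += 1
    # Counting the observed sample as one more realisation keeps p above 0.
    return (exceed + 1) / (reps + 1)

def mc_standard_error(p, reps):
    """Binomial standard error of a Monte Carlo p-value based on `reps` draws."""
    return math.sqrt(p * (1.0 - p) / reps)
```

With 10,000 realisations and a true p-value of 0.05, the standard error is about 0.0022, so Monte Carlo p-values are precise rather than exact, and more realisations shrink the error further.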

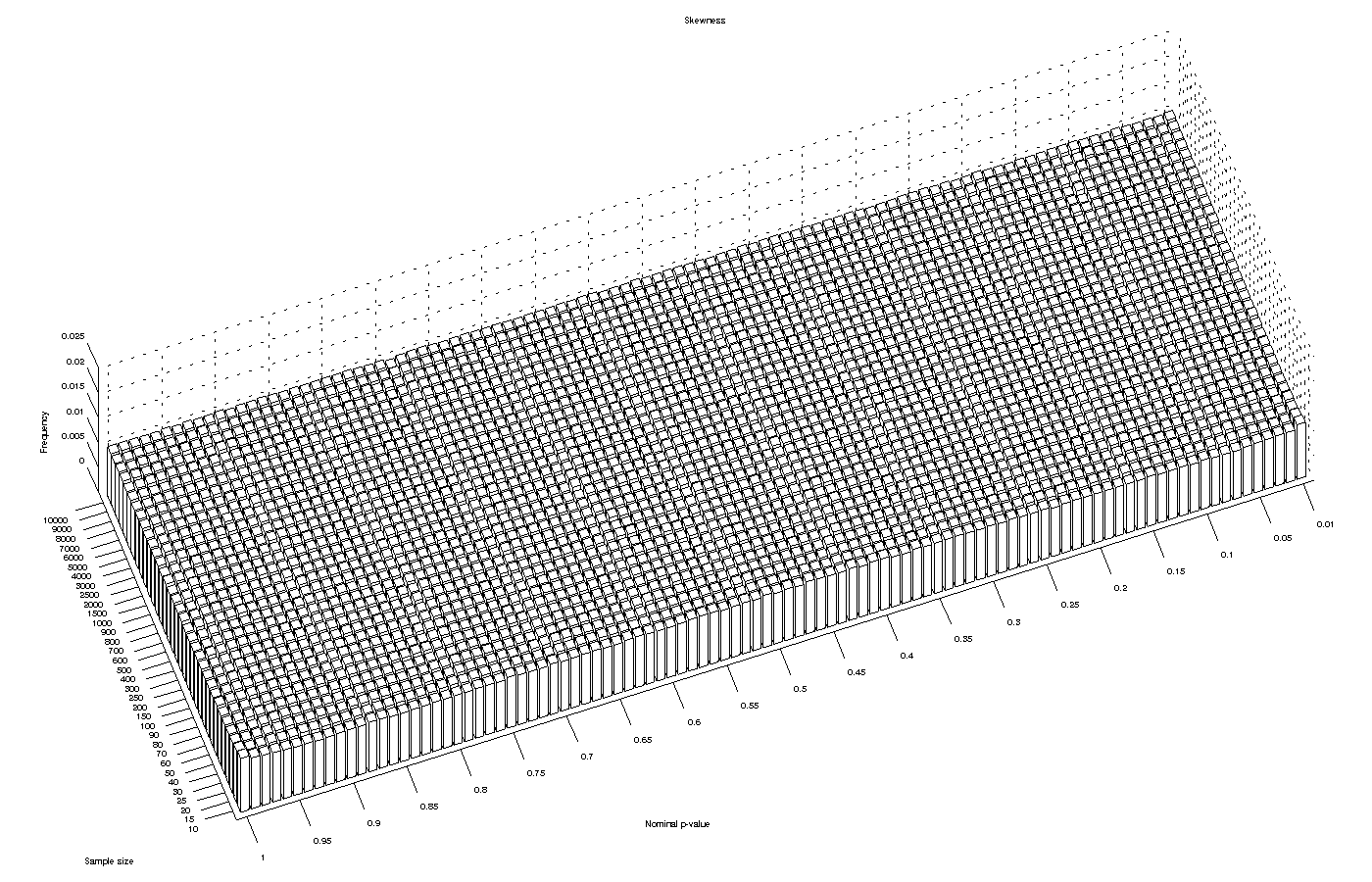

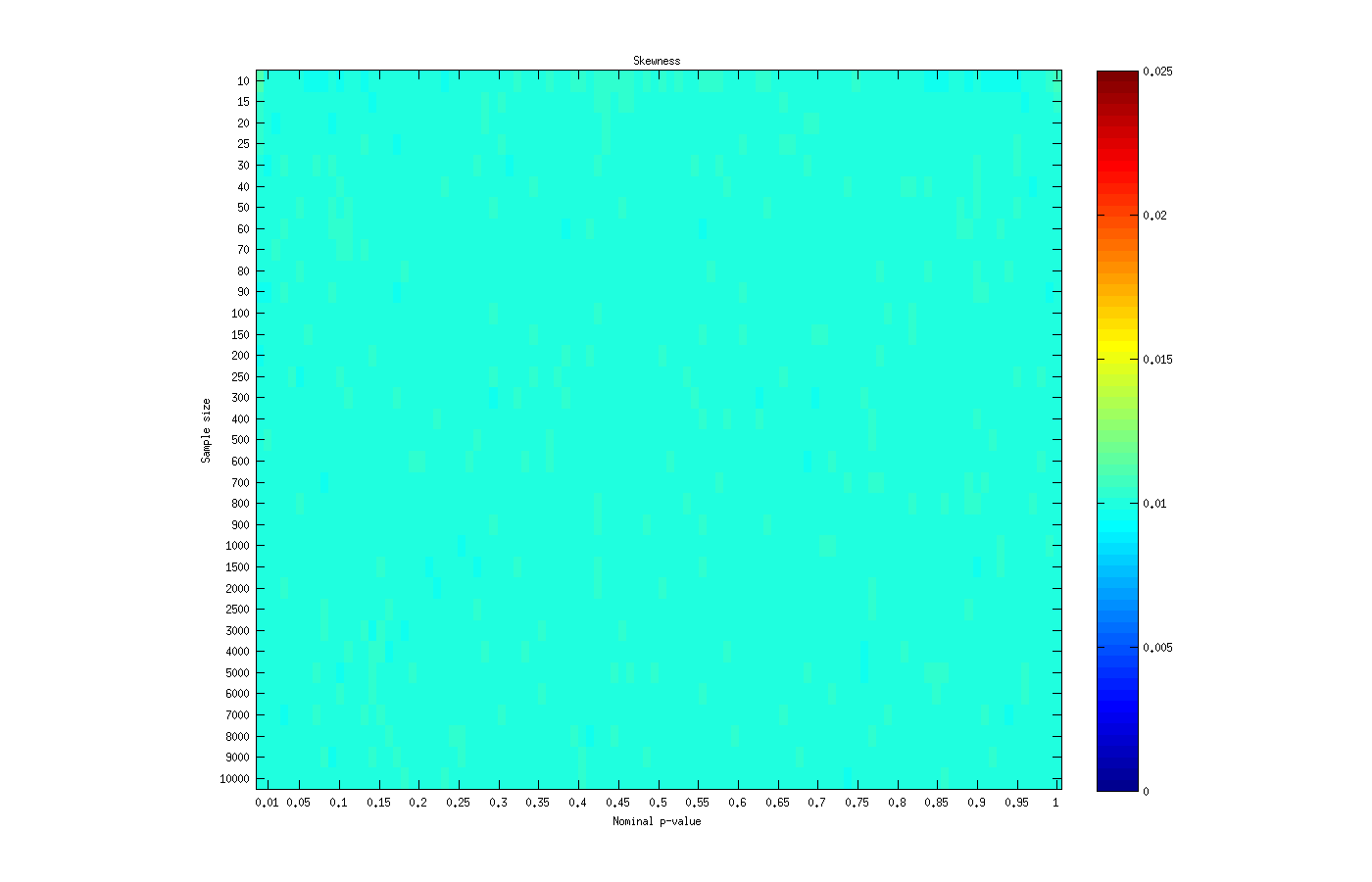

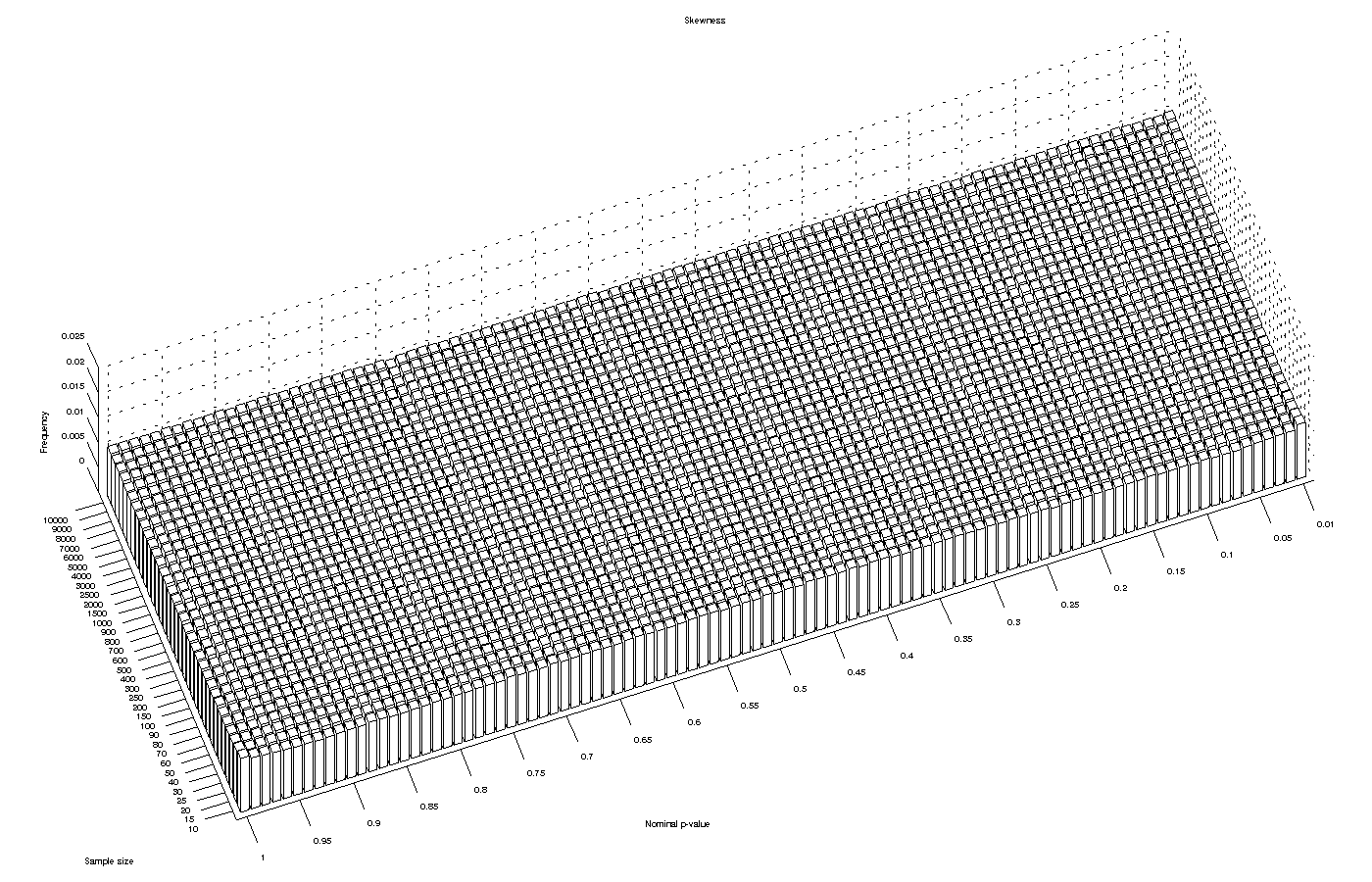

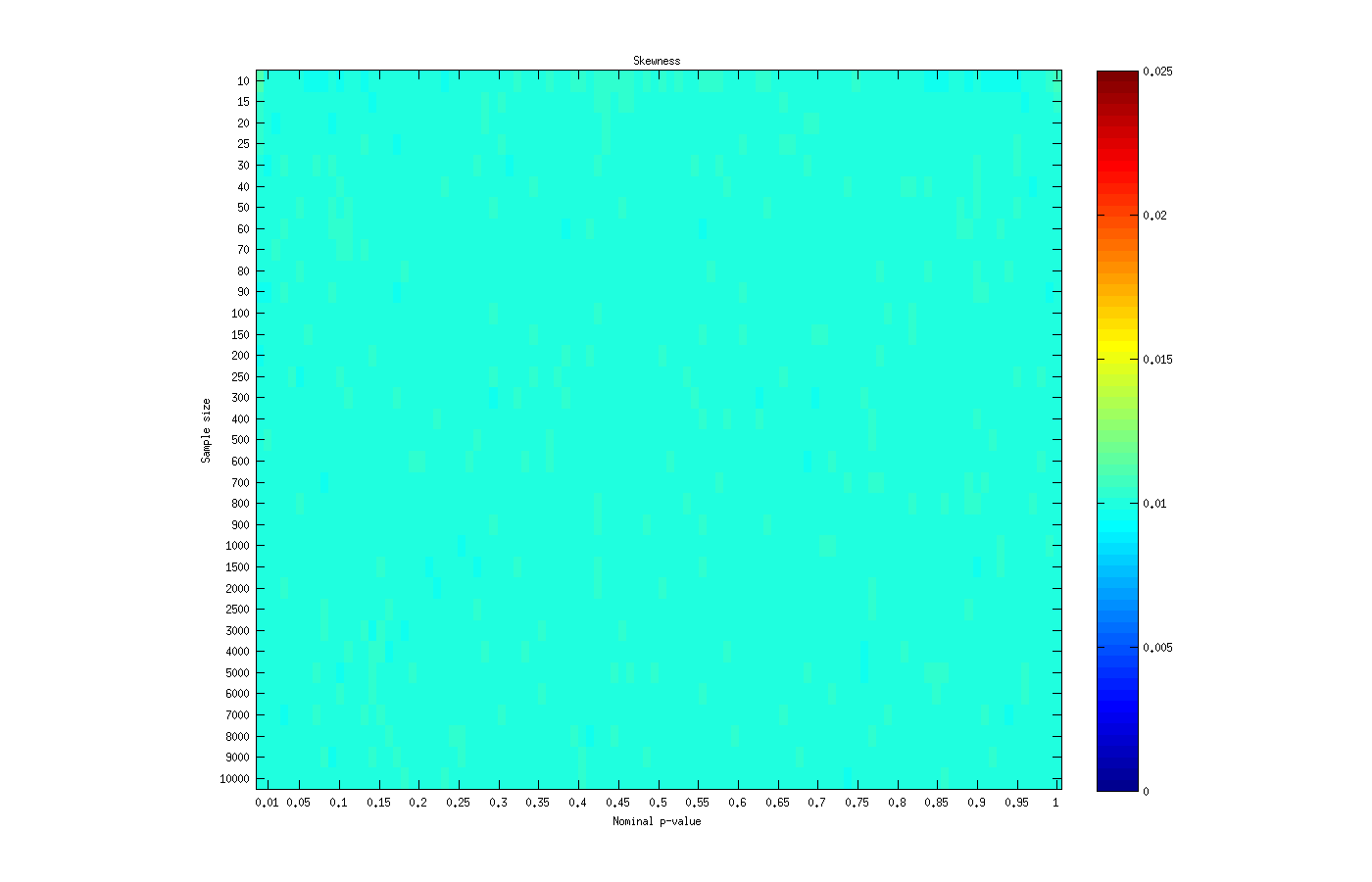

Skewness test

The skewness test is perhaps the one that performed best in terms of the asymptotic approximation to the p-value (which does not imply that this test is more powerful or has any other feature that would qualify it as better than the others). The approximation produces p-values that are virtually identical to the nominal p-values for all sample sizes tested.
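For reference, a minimal Python sketch (not the author's MATLAB code) of the normal approximation to the sample skewness usually attributed to D'Agostino (1970) is shown below, together with a helper that estimates the empirical rejection rate under normality. The function names are hypothetical, and the constants are assumed to follow the published transformation; they should be checked against the original paper before being relied upon.

```python
import math
import random

def skewness_test(x):
    """Skewness test: returns (Z, two-sided p-value); intended for n >= 8."""
    n = len(x)
    if n < 8:
        raise ValueError("approximation intended for n >= 8")
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    g1 = m3 / m2 ** 1.5                          # sample skewness sqrt(b1)
    y = g1 * math.sqrt((n + 1) * (n + 3) / (6.0 * (n - 2)))
    beta2 = (3.0 * (n * n + 27 * n - 70) * (n + 1) * (n + 3)
             / ((n - 2.0) * (n + 5) * (n + 7) * (n + 9)))
    w2 = -1.0 + math.sqrt(2.0 * (beta2 - 1.0))
    delta = 1.0 / math.sqrt(0.5 * math.log(w2))
    alpha = math.sqrt(2.0 / (w2 - 1.0))
    z = delta * math.asinh(y / alpha)           # asinh(t) = log(t + sqrt(t^2 + 1))
    p = math.erfc(abs(z) / math.sqrt(2.0))      # two-sided p-value
    return z, p

def empirical_size(n, reps=5000, alpha=0.05, seed=0):
    """Proportion of normal samples of size n rejected at level alpha."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        _, p = skewness_test([rng.gauss(0.0, 1.0) for _ in range(n)])
        rejections += p < alpha
    return rejections / reps
```

A perfectly symmetric sample gives Z = 0 and p = 1, and `empirical_size` should stay close to the nominal level across sample sizes if the approximation behaves as described above.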

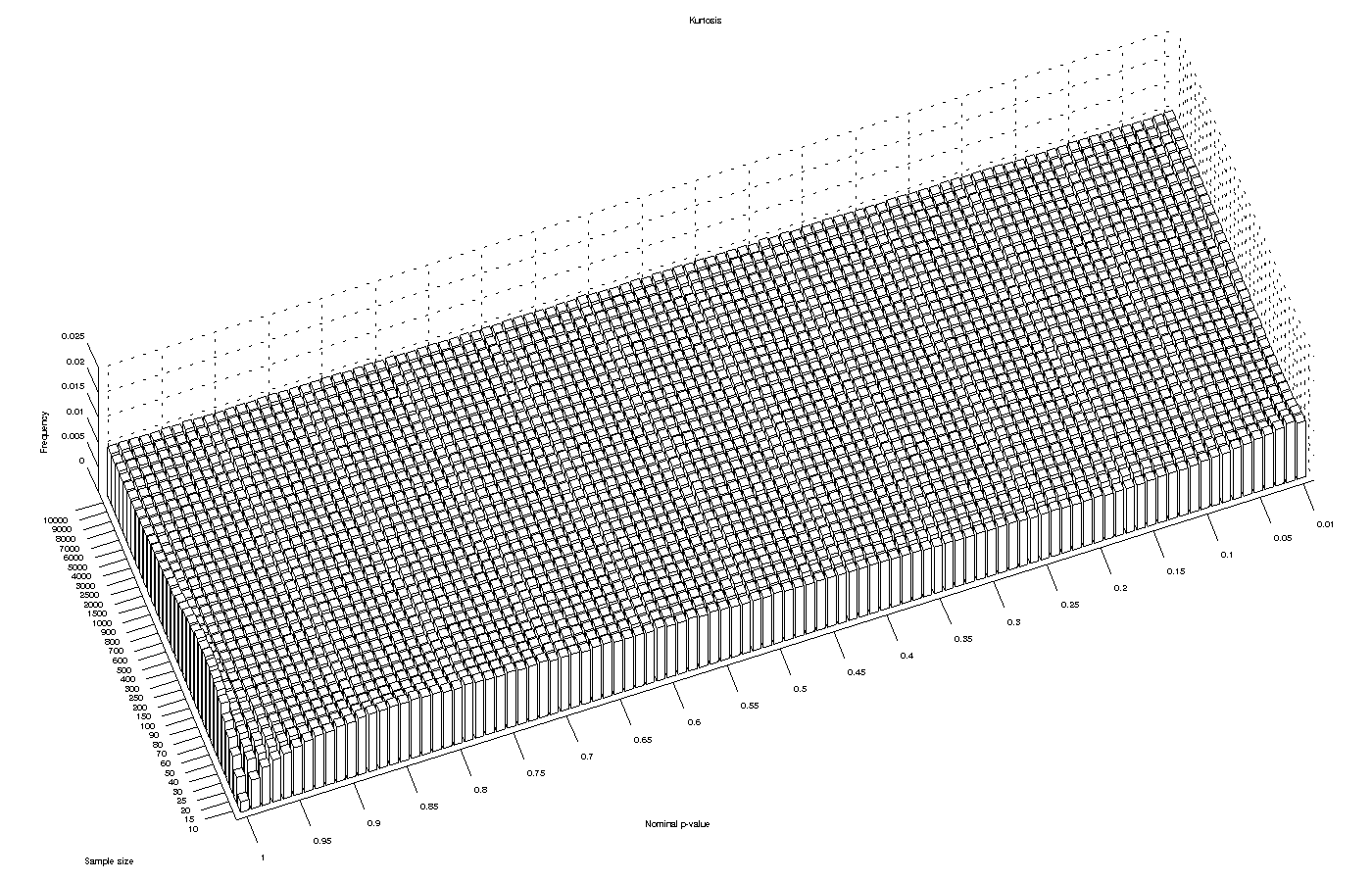

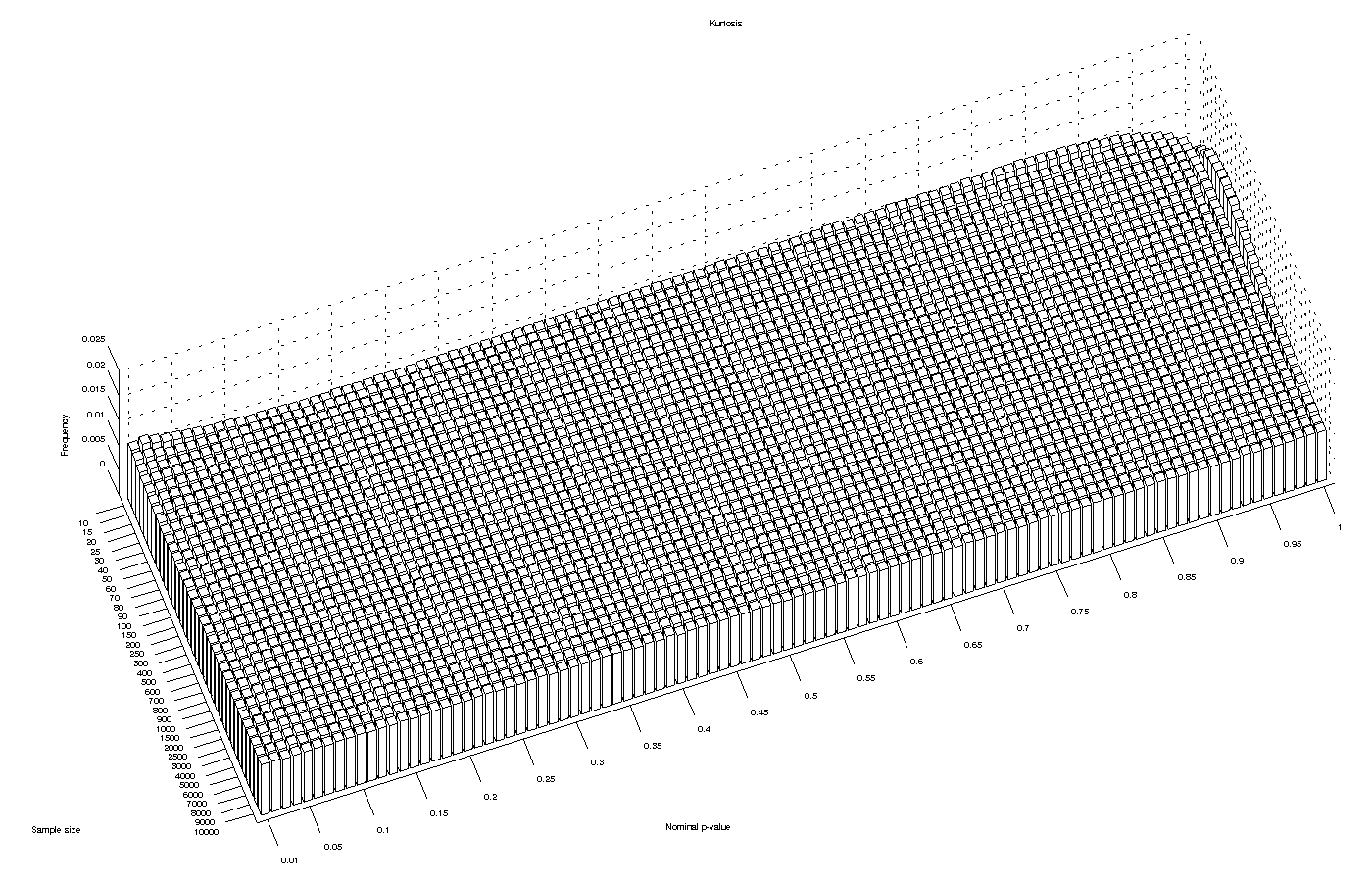

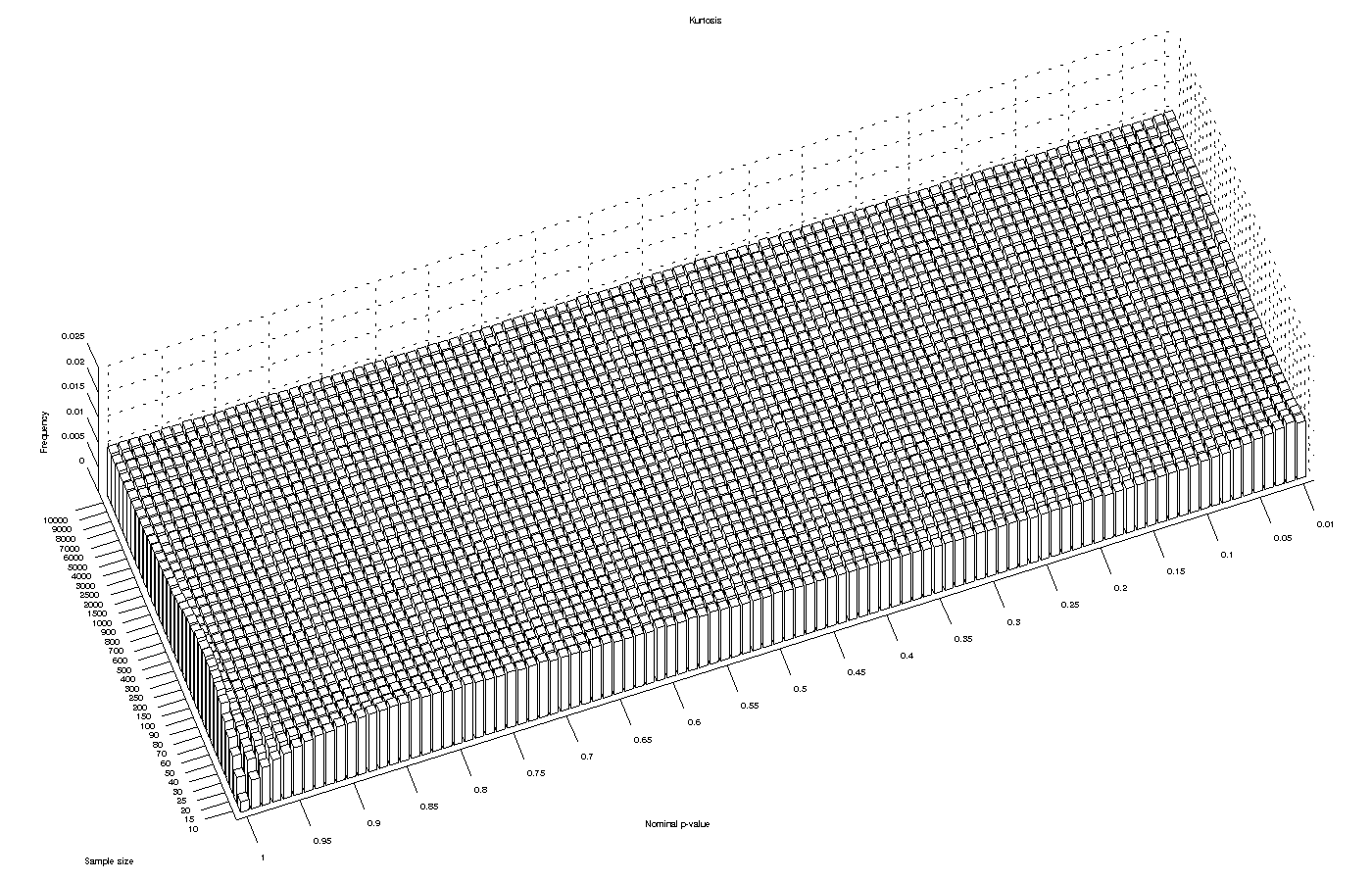

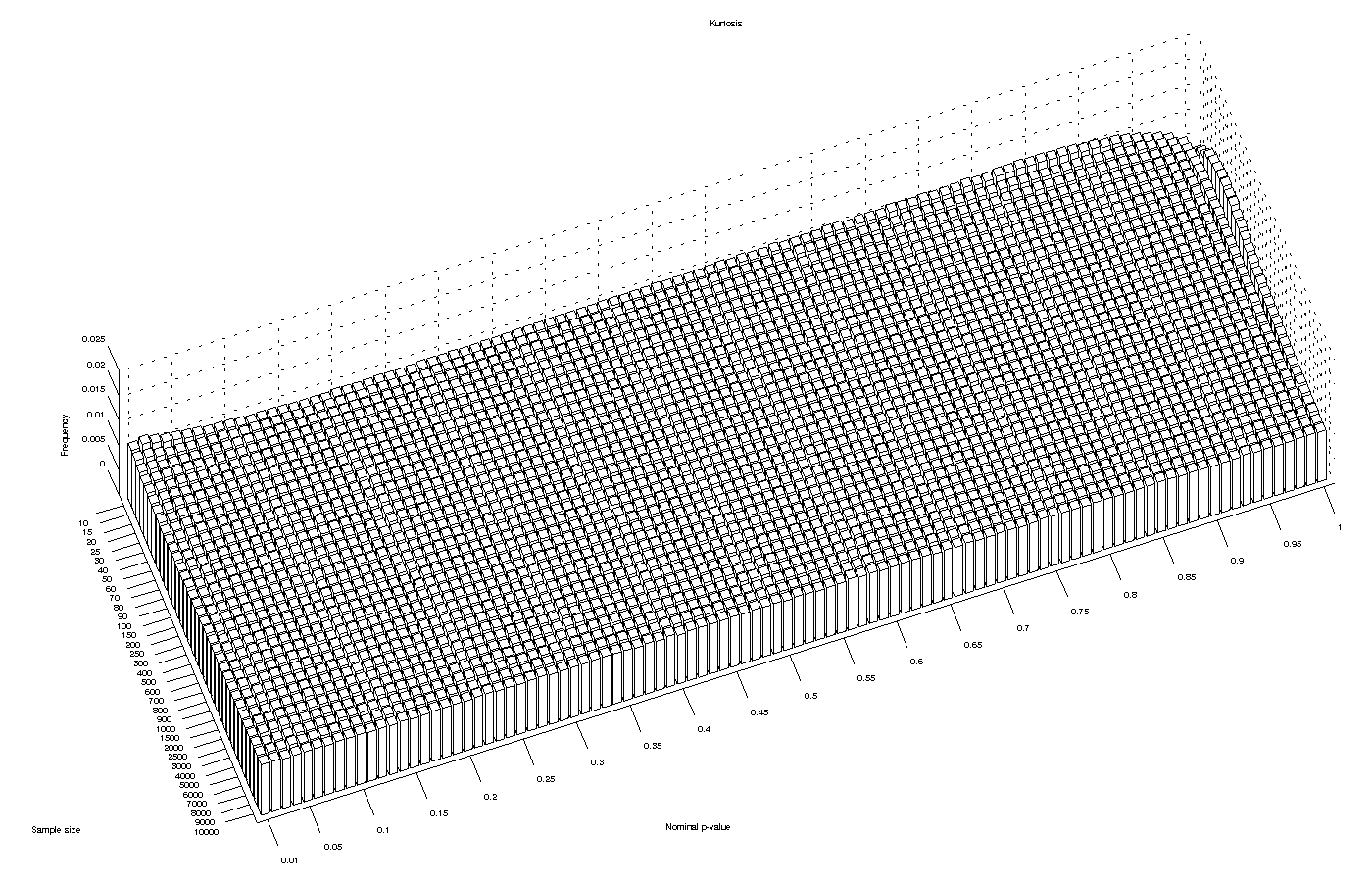

Kurtosis test

The asymptotic approximation for the kurtosis test performed reasonably well for most significance levels and for large sample sizes. However, it produced an excess of low p-values for sample sizes smaller than 20, implying that the test can be anti-conservative with small samples.
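The kurtosis approximation can be sketched in Python as below, assuming the transformation of the sample kurtosis b2 usually attributed to Anscombe and Glynn (1983), in the form found in common statistical software. The function name is hypothetical and the constants should be verified against the original paper. The cube-root step is where the approximation is most fragile, and it is undefined when the denominator is exactly zero.

```python
import math

def kurtosis_test(x):
    """Kurtosis test: returns (Z, two-sided p-value); needs n >= 5."""
    n = len(x)
    if n < 5:
        raise ValueError("need at least 5 observations")
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    b2 = m4 / m2 ** 2                                # sample kurtosis (3 if normal)
    e = 3.0 * (n - 1) / (n + 1)                      # E[b2] under normality
    var_b2 = 24.0 * n * (n - 2) * (n - 3) / ((n + 1) ** 2 * (n + 3) * (n + 5))
    std_b2 = (b2 - e) / math.sqrt(var_b2)            # standardised b2
    # Third standardised moment of b2 under normality
    sqrt_beta1 = (6.0 * (n * n - 5 * n + 2) / ((n + 7) * (n + 9))
                  * math.sqrt(6.0 * (n + 3) * (n + 5) / (n * (n - 2) * (n - 3))))
    a = 6.0 + 8.0 / sqrt_beta1 * (2.0 / sqrt_beta1
                                  + math.sqrt(1.0 + 4.0 / sqrt_beta1 ** 2))
    term1 = 1.0 - 2.0 / (9.0 * a)
    denom = 1.0 + std_b2 * math.sqrt(2.0 / (a - 4.0))
    if denom == 0.0:
        return float("nan"), float("nan")
    # Signed cube root of (1 - 2/a) / denom
    term2 = math.copysign(abs((1.0 - 2.0 / a) / denom) ** (1.0 / 3.0), denom)
    z = (term1 - term2) / math.sqrt(2.0 / (9.0 * a))
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p
```

Heavy-tailed samples give positive Z and light-tailed samples give negative Z. Simulating normal samples of size 10 and counting p-values below 0.05 is one way to examine the excess of low p-values described above.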

D’Agostino-Pearson omnibus test

The p-value approximation for the D’Agostino-Pearson K² statistic behaved poorly and erratically for sample sizes smaller than 200, particularly at low significance levels, which are generally the ones of greatest interest. For this test, Monte Carlo simulations to derive the critical values are highly recommended.

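The omnibus statistic combines the two Z scores discussed above. A minimal sketch, assuming the Z scores have already been computed with the skewness and kurtosis approximations: K² is their sum of squares and is referred to a chi-squared distribution with 2 degrees of freedom, whose survival function has the closed form exp(−K²/2). The function name is hypothetical.

```python
import math

def dagostino_pearson(z_skew, z_kurt):
    """Omnibus K^2 statistic and its asymptotic chi-squared(2) p-value."""
    k2 = z_skew ** 2 + z_kurt ** 2
    p = math.exp(-k2 / 2.0)    # P(chi-squared with 2 df > k2)
    return k2, p
```

For example, Z scores of 1.5 and 2.0 give K² = 6.25 and p ≈ 0.044. Any inaccuracy in either Z score propagates into K², which is one reason the combined approximation can behave worse than its parts.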

Jarque-Bera test

Another test for which the asymptotic approximation was poor is the Jarque-Bera test. The approximation to the distribution of the JB statistic is not valid for small p-values at any of the sample sizes tested, although for sample sizes above 1500 the behaviour was reasonably stable. Monte Carlo simulations are also more appropriate for this test.

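The Jarque-Bera statistic and its asymptotic p-value can be sketched as follows (the function name is hypothetical). JB = n/6 · (S² + (K − 3)²/4), where S and K are the sample skewness and kurtosis, and it is referred to a chi-squared distribution with 2 degrees of freedom. That reference distribution only holds as n grows, which is consistent with the poor small-sample behaviour described above.

```python
import math

def jarque_bera(x):
    """Jarque-Bera statistic and its asymptotic chi-squared(2) p-value."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    s = m3 / m2 ** 1.5        # sample skewness
    k = m4 / m2 ** 2          # sample kurtosis (3 under normality)
    jb = n / 6.0 * (s ** 2 + (k - 3.0) ** 2 / 4.0)
    p = math.exp(-jb / 2.0)   # survival function of chi-squared with 2 df
    return jb, p
```

For the sample 1, 2, 3, 4, 5, the skewness is 0 and the kurtosis is 1.7, giving JB ≈ 0.352 and p ≈ 0.84. For small samples, the same statistic can be paired with a Monte Carlo null distribution instead of the chi-squared one.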

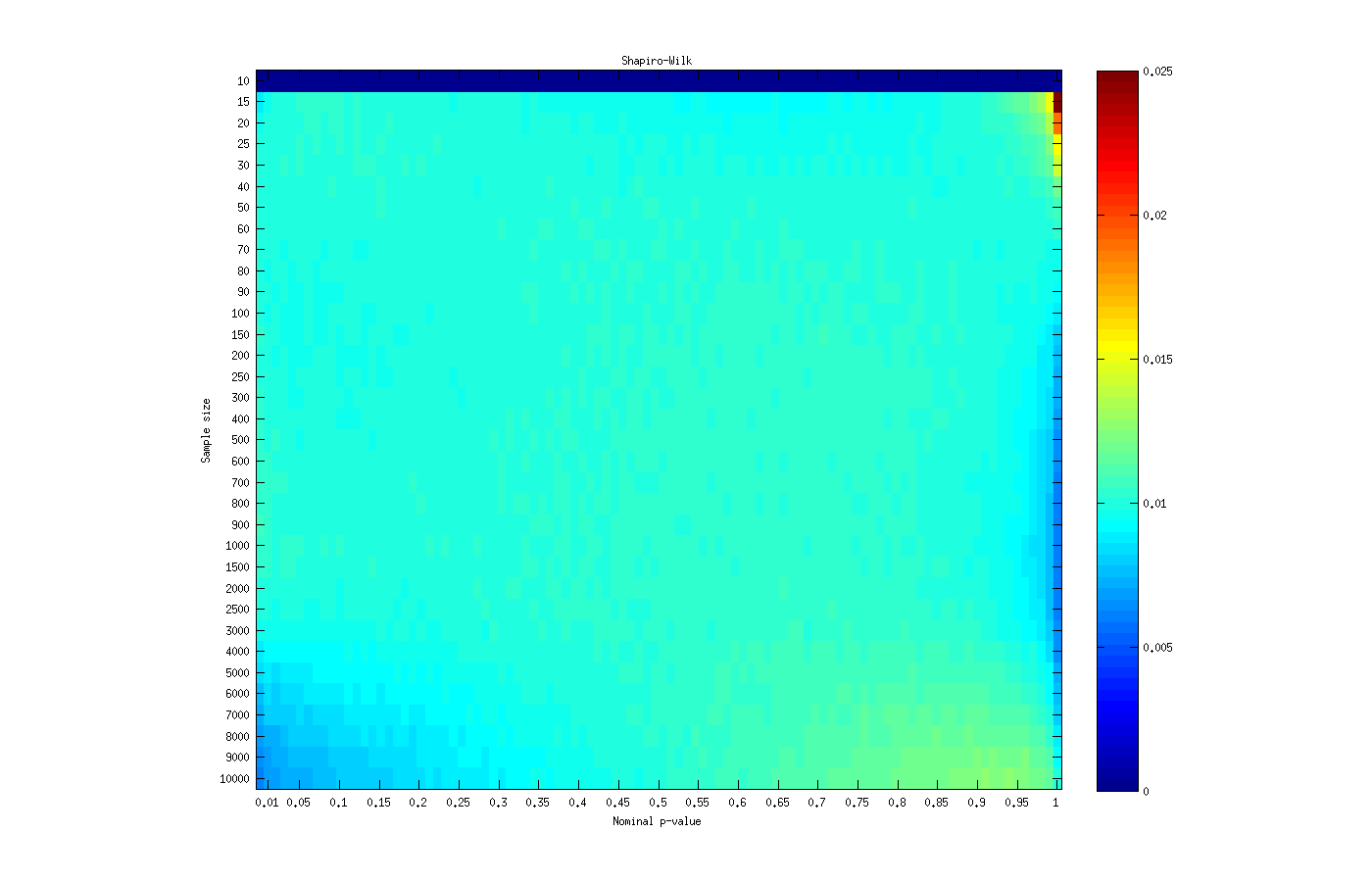

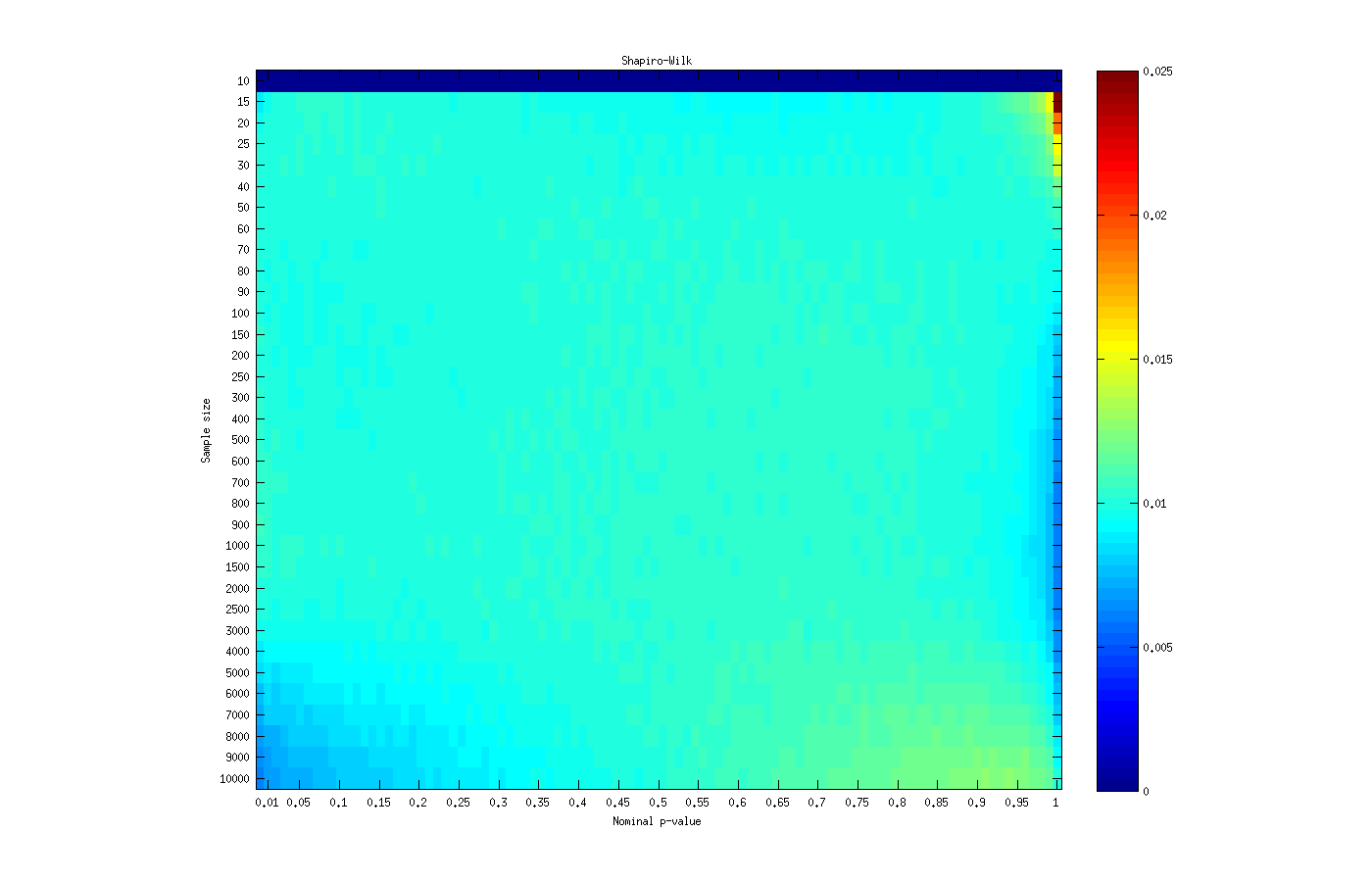

Shapiro-Wilk test

The asymptotic approximation to the distribution of the Shapiro-Wilk W statistic seems adequate for sample sizes larger than 15 and smaller than 4000, although it is slightly anti-conservative for most p-values at sample sizes smaller than 20. It behaves aberrantly at p-values close to 1, which are generally of no interest anyway.

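Computing W itself requires approximate coefficients that are too long to reproduce here, but the step evaluated in this section is the mapping from W to a p-value. A minimal sketch, assuming Royston's normalising transformation for 12 ≤ n ≤ 5000, in which log(1 − W) is treated as normal with a mean and standard deviation given by polynomials in log(n). The constants are assumed to be those of Royston's algorithm and should be verified against the original publication; the function name is hypothetical.

```python
import math

def shapiro_wilk_pvalue(w, n):
    """Approximate p-value for a Shapiro-Wilk W statistic (12 <= n <= 5000)."""
    if not 12 <= n <= 5000:
        raise ValueError("this branch of the approximation covers 12 <= n <= 5000")
    if not 0.0 < w < 1.0:
        raise ValueError("W must lie strictly between 0 and 1")
    ln_n = math.log(n)
    # Mean and standard deviation of log(1 - W) under normality
    mu = -1.5861 - 0.31082 * ln_n - 0.083751 * ln_n ** 2 + 0.0038915 * ln_n ** 3
    sigma = math.exp(-0.4803 - 0.082676 * ln_n + 0.0030302 * ln_n ** 2)
    z = (math.log(1.0 - w) - mu) / sigma
    return 0.5 * math.erfc(z / math.sqrt(2.0))   # upper tail: 1 - Phi(z)
```

Values of W close to 1 push log(1 − W) far into the lower tail, so the p-value approaches 1. This is the region where the aberrant behaviour mentioned above occurs.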

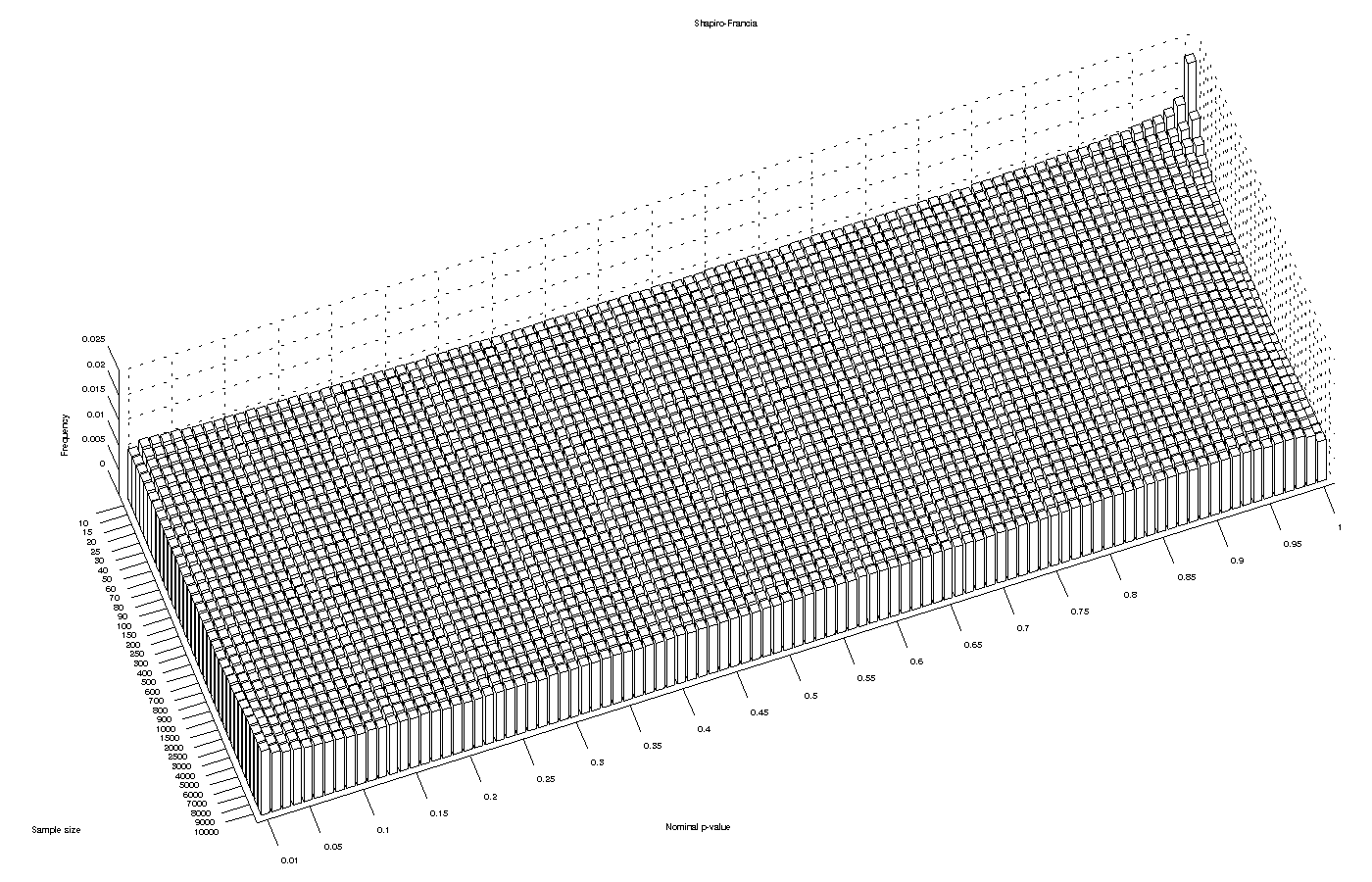

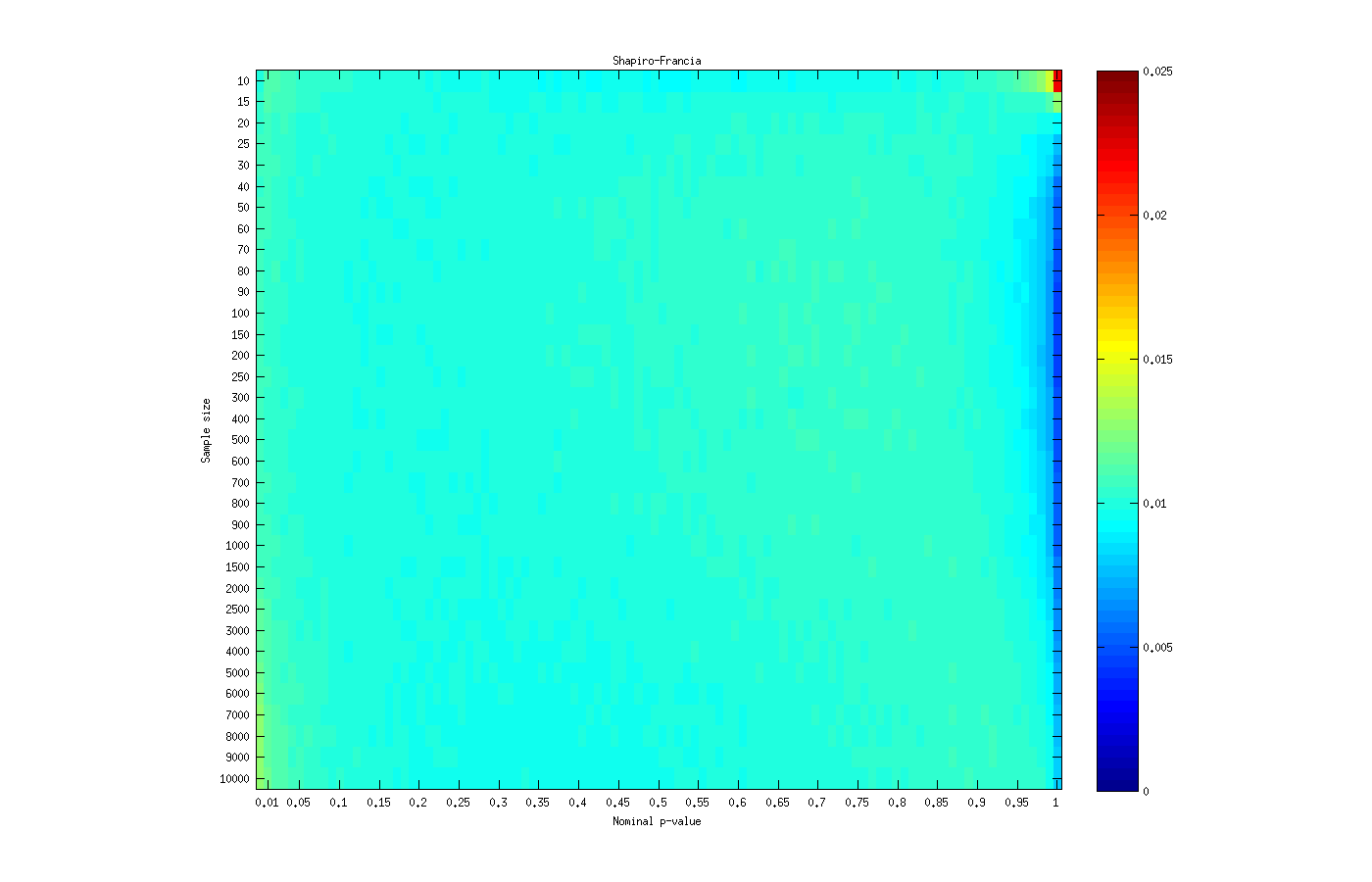

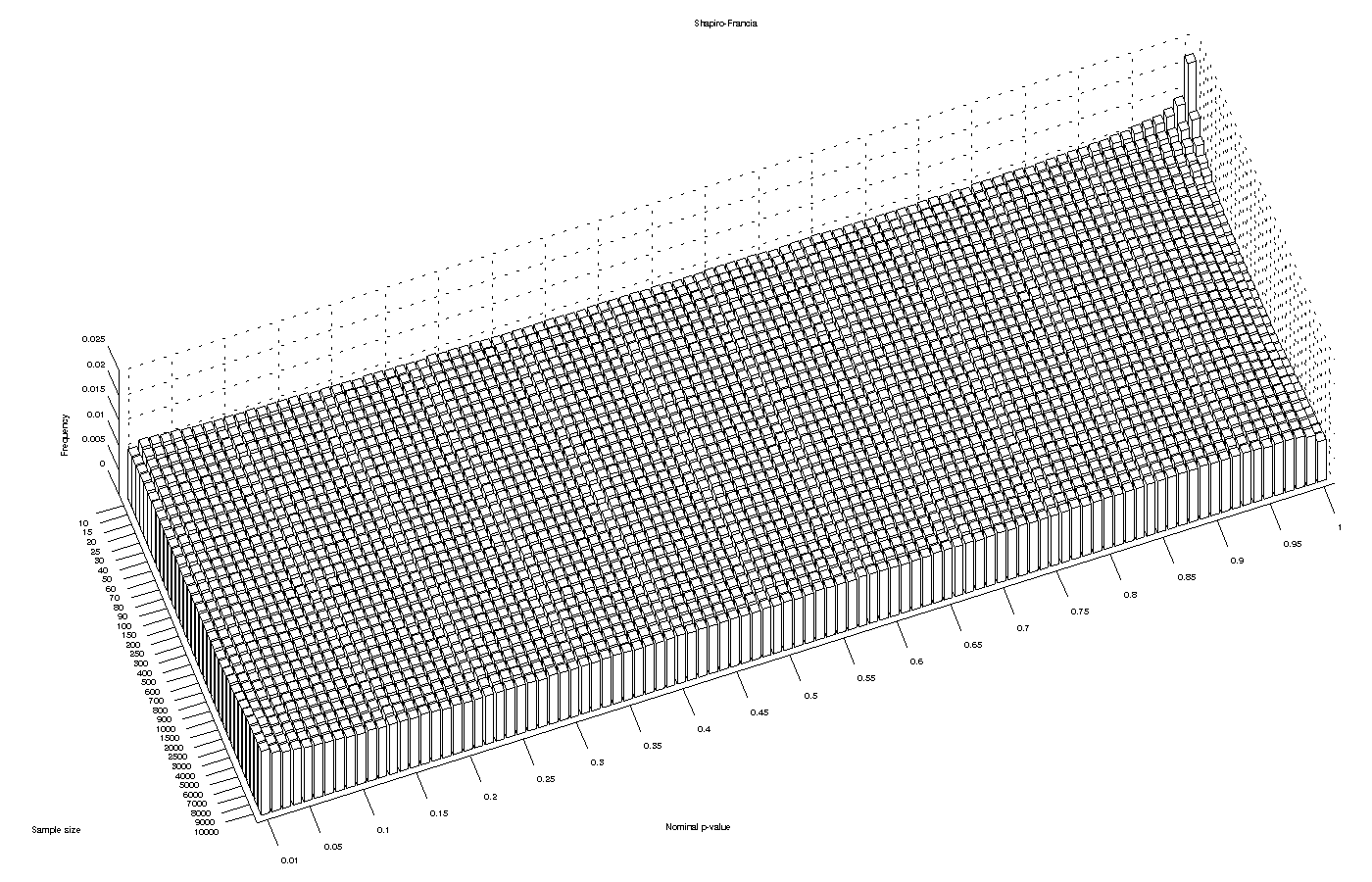

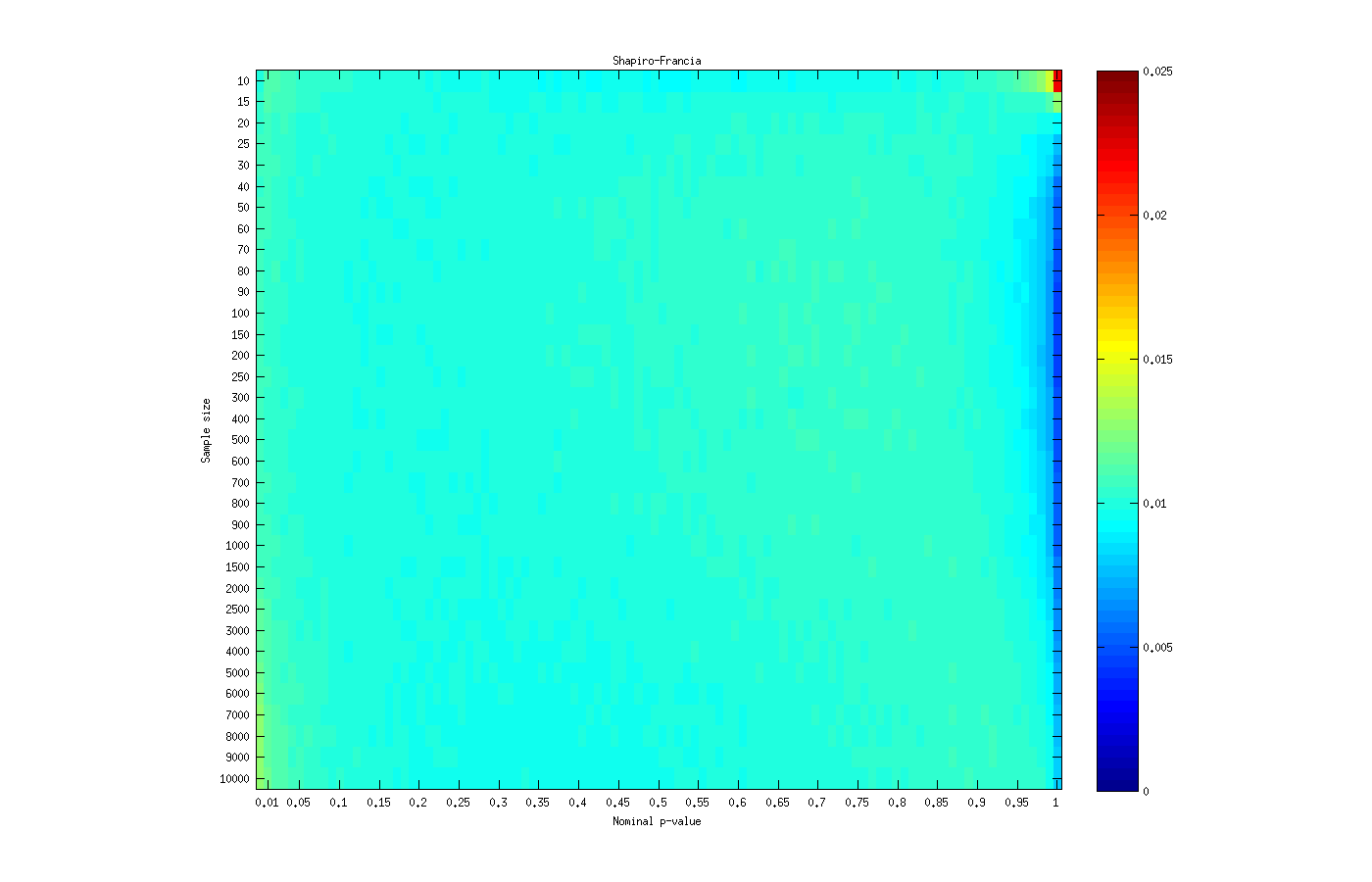

Shapiro-Francia test

For the Shapiro-Francia W′ statistic, the asymptotic behaviour is similar to that observed for the Shapiro-Wilk test, except that the approximation remains valid for sample sizes larger than 4000.

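The Shapiro-Francia statistic is simpler than Shapiro-Wilk's because it only needs approximate expected normal order statistics. A minimal sketch, assuming Blom scores Φ⁻¹((i − 3/8)/(n + 1/4)) for those expectations and Royston's (1993) approximation for the p-value, in which log(1 − W′) is treated as normal with mean and standard deviation given in terms of u = log(n) and v = log(u). The constants should be checked against the original paper; the function name is hypothetical.

```python
import math
from statistics import NormalDist

def shapiro_francia(x):
    """Shapiro-Francia W' and an approximate p-value; needs n >= 5."""
    n = len(x)
    if n < 5:
        raise ValueError("need at least 5 observations")
    nd = NormalDist()
    xs = sorted(x)
    # Blom scores approximate the expected normal order statistics
    m = [nd.inv_cdf((i - 0.375) / (n + 0.25)) for i in range(1, n + 1)]
    mx, mm = sum(xs) / n, sum(m) / n
    sxy = sum((a - mx) * (b - mm) for a, b in zip(xs, m))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - mm) ** 2 for b in m)
    w = sxy ** 2 / (sxx * syy)        # squared correlation
    if w >= 1.0:                      # guard against log(0) from rounding
        return w, 1.0
    u = math.log(n)
    v = math.log(u)
    mu = -1.2725 + 1.0521 * (v - u)
    sigma = 1.0308 - 0.26758 * (v + 2.0 / u)
    z = (math.log(1.0 - w) - mu) / sigma
    return w, 1.0 - nd.cdf(z)         # upper tail
```

A sample made of the Blom scores themselves gives W′ = 1 and p close to 1, whereas a strongly skewed sample such as 1, 2, 4, …, 2¹⁹ gives a small W′ and a small p-value.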

Other tests

Other popular normality or distributional tests, such as the Kolmogorov-Smirnov test, the Lilliefors test, the Cramér-von Mises criterion or the Anderson-Darling test, do not have well-established asymptotic approximations when the mean and variance of the normal distribution are estimated from the data and, as a consequence, depend on Monte Carlo simulations to derive critical values. The p-values for these tests are accurate as long as the simulations were performed adequately, with enough realisations.
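As an illustration of deriving critical values by simulation, the Lilliefors test can be sketched in Python as below. The function names are hypothetical, and 2,000 realisations are used only to keep the example fast; published tables would use many more. Because the statistic is invariant to location and scale when the mean and standard deviation are estimated from the data, simulating from a standard normal is sufficient.

```python
import math
import random
from statistics import NormalDist

def lilliefors_d(x):
    """Kolmogorov-Smirnov distance between the empirical CDF of x and a normal
    CDF whose mean and standard deviation are estimated from x."""
    n = len(x)
    mean = sum(x) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in x) / (n - 1))
    nd = NormalDist(mean, sd)
    d = 0.0
    for i, v in enumerate(sorted(x), start=1):
        f = nd.cdf(v)
        # The ECDF jumps from (i - 1)/n to i/n at v; check both sides
        d = max(d, i / n - f, f - (i - 1) / n)
    return d

def lilliefors_mc(x, reps=2000, alpha=0.05, seed=0):
    """Observed D, Monte Carlo p-value and critical value at level alpha."""
    n = len(x)
    rng = random.Random(seed)
    d_obs = lilliefors_d(x)
    null = sorted(lilliefors_d([rng.gauss(0.0, 1.0) for _ in range(n)])
                  for _ in range(reps))
    p = (sum(d >= d_obs for d in null) + 1) / (reps + 1)
    # (1 - alpha) quantile of the simulated null distribution
    crit = null[min(math.ceil((1 - alpha) * reps) - 1, reps - 1)]
    return d_obs, p, crit
```

The null is rejected at level alpha when the observed D exceeds the simulated critical value, or equivalently when the Monte Carlo p-value falls below alpha.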

Conclusion

Care should be taken when using formulas that approximate the distribution of the statistics for these tests. The approximations are not valid for all sample sizes, and can be very inaccurate in many cases. When in doubt, critical values based on Monte Carlo simulations might be preferred.
